Let’s not overcomplicate it: by the current standard, a nautical mile is exactly 1,852 meters (about 6,076 feet). That’s true worldwide. This neat certainty, though, is surprisingly recent: the history of the nautical mile is long and messy, and the modern definition is comparatively young.

The word “mile” comes from the Roman mile, from the Latin “mille passus,” meaning “a thousand paces” (where one “pace” was really a double step, the distance between two prints of the same foot). Its length varied quite a bit, depending on who was pacing and under what conditions. The first standardisation occurred in 29 BC, when Agrippa defined the mile as 5,000 Roman feet and set the foot by the length of his own foot. Today it’s estimated that this mile was about 1,480 meters.

In the 15th century, geographers began using latitude and longitude as coordinates on an Earth modelled as a sphere. At the same time, there were various attempts to link units of length to the circumference of the Earth, and many definitions were published tying contemporary units to one degree of latitude. Given the large uncertainties in measuring Earth’s true size at the time, the results differed widely. Authors reconciled them in different ways: sometimes by changing how many units fit into a degree, other times by changing the unit’s length while keeping the count per degree.

Around the same period, practical navigation embraced the log and dead reckoning. To measure speed, sailors threw a float overboard and let a line, knotted at regular intervals, run freely off a reel. A 30-second sandglass measured the time, and the speed was the number of knots that slipped through the navigator’s hands in that interval. Because 30 seconds is 1/120 of an hour, the knots had to be spaced 1/120 of a mile apart for the count to equal the speed in miles per hour. In this setup, the actual length of a nautical mile was effectively set by the spacing between the knots.
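The log-line arithmetic can be sketched in a few lines. This is an idealized model, assuming no slip of the float, no stretch in the line and a glass that runs exactly 30 seconds; it uses the modern 1,852 m mile purely for illustration, since historical sailors worked with other mile lengths. The function names `knot_spacing` and `knots_counted` are hypothetical helpers, not any standard API.

```python
SECONDS_PER_HOUR = 3600.0


def knot_spacing(mile_length: float, glass_seconds: float = 30.0) -> float:
    """Knot spacing that makes 'knots counted per glass' equal 'miles per hour'.

    During one glass the ship covers glass_seconds / 3600 of its hourly run,
    so each knot must stand for that same fraction of a mile.
    """
    return mile_length * glass_seconds / SECONDS_PER_HOUR


def knots_counted(speed_m_per_s: float, spacing_m: float, glass_seconds: float = 30.0) -> float:
    """Idealized number of knots paid out while the glass runs (no slip, no stretch)."""
    line_paid_out = speed_m_per_s * glass_seconds  # meters of line off the reel
    return line_paid_out / spacing_m


if __name__ == "__main__":
    spacing = knot_spacing(1852.0)  # modern mile, in meters
    print(f"knot spacing: {spacing:.2f} m")
    # A ship making 6 nautical miles per hour, converted to meters per second.
    speed = 6 * 1852.0 / SECONDS_PER_HOUR
    print(f"knots counted: {knots_counted(speed, spacing):.2f}")
    # The same rule applied to a mile of 6,080 feet gives a spacing in feet.
    print(f"knot spacing: {knot_spacing(6080.0):.2f} ft")
```

Run as a script, it prints a spacing of 15.43 m, a count of 6.00 knots for a ship making 6 miles per hour, and 50.67 ft for a 6,080 ft mile. The point of the design is that the count needs no conversion: whatever mile length the knots encode is the mile the speed is reported in.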

The Earth is not a sphere

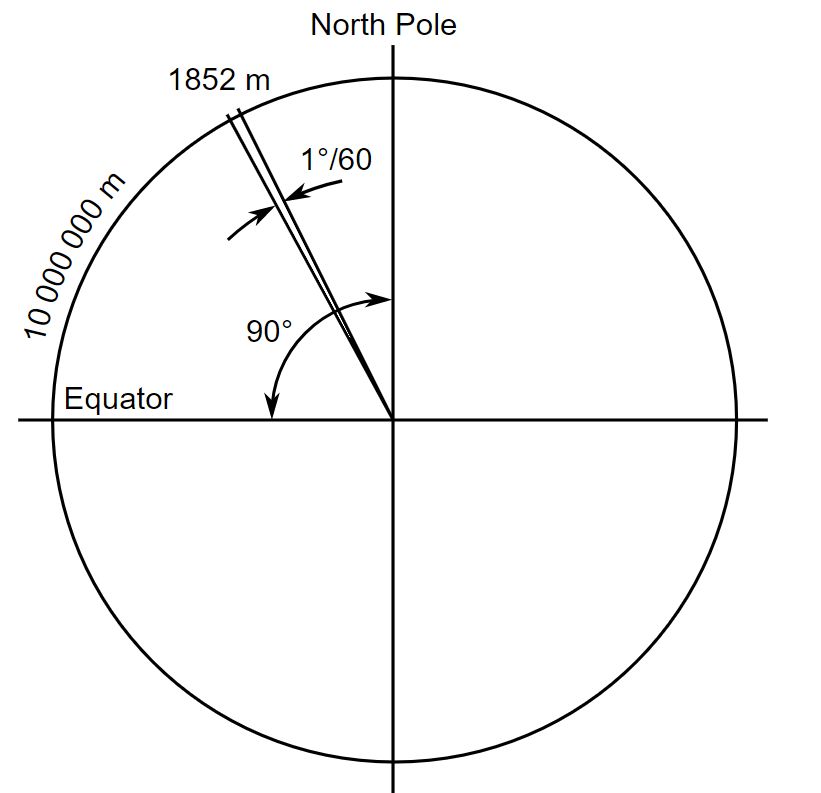

A consensus gradually emerged that one nautical mile should correspond to one minute of latitude (so 60 miles per degree). The English mathematician Robert Hues proposed this as early as 1594. But the actual length remained contentious, especially once it became clear that Earth is not a perfect sphere but an oblate spheroid, flattened at the poles. Because the meridian curves less sharply near the poles than near the equator, a minute of latitude is longer at high latitudes, so a mile defined this way would have a different length at every latitude.
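To see how much a "minute of latitude" mile would drift, here is a minimal sketch that evaluates the meridional radius of curvature M = a(1 − e²) / (1 − e² sin²φ)^(3/2) and multiplies it by one arcminute in radians. It assumes the Clarke 1866 semi-axes a = 6,378,206.4 m and b = 6,356,583.8 m (standard published values, not given in the text), and the helper name `minute_of_latitude` is hypothetical.

```python
import math

# Clarke 1866 semi-axes in meters: standard published values, assumed here.
A = 6_378_206.4  # equatorial semi-axis a
B = 6_356_583.8  # polar semi-axis b
E2 = 1.0 - (B / A) ** 2  # first eccentricity squared


def minute_of_latitude(lat_deg: float, a: float = A, e2: float = E2) -> float:
    """Approximate length in meters of one minute of latitude centred on lat_deg.

    Uses the meridional radius of curvature M = a(1 - e^2) / (1 - e^2 sin^2 phi)^(3/2).
    M barely changes over a single arcminute, so M times one arcminute in
    radians is a close approximation to the exact meridian arc length.
    """
    phi = math.radians(lat_deg)
    m = a * (1.0 - e2) / (1.0 - e2 * math.sin(phi) ** 2) ** 1.5
    return m * math.radians(1.0 / 60.0)


if __name__ == "__main__":
    for lat in (0, 30, 45, 60, 90):
        print(f"{lat:>2} deg: {minute_of_latitude(lat):8.2f} m")
```

Under this assumed ellipsoid the result runs from roughly 1,843 m at the equator to about 1,862 m at the poles, a spread of nearly 19 m, which is why a single fixed length had to be agreed rather than read off the globe.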

In the English-speaking world, a definition based on the Clarke ellipsoid (published in 1866 as a good approximation of Earth’s shape) took hold. The mile was defined as the length of one minute of arc along the meridian of a sphere having the same surface area as the Clarke ellipsoid. The calculation yielded 1,853.2480 meters, or 6,080.210 feet, per nautical mile. The British Admiralty adopted a standard length of 6,080 feet; the United States used 6,080.21 feet.
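The Clarke-based figure can be reproduced with a short calculation: find the radius of the sphere whose surface area equals the ellipsoid's (the authalic radius), then take one minute of arc on that sphere. This sketch assumes the Clarke 1866 semi-axes a = 6,378,206.4 m and b = 6,356,583.8 m and the international foot of 0.3048 m; none of these constants appear in the text, and the helper names are hypothetical.

```python
import math

# Clarke 1866 semi-axes in meters: standard published values, assumed here.
A = 6_378_206.4  # equatorial semi-axis a
B = 6_356_583.8  # polar semi-axis b
FOOT_M = 0.3048  # international foot, in meters


def authalic_radius(a: float, b: float) -> float:
    """Radius of the sphere with the same surface area as an oblate spheroid (a > b)."""
    e = math.sqrt(1.0 - (b / a) ** 2)  # first eccentricity
    # Oblate spheroid area: 2*pi*a^2 + pi*(b^2/e)*ln((1+e)/(1-e)),
    # where ln((1+e)/(1-e)) equals 2*atanh(e).
    area = 2.0 * math.pi * a**2 + math.pi * (b**2 / e) * 2.0 * math.atanh(e)
    return math.sqrt(area / (4.0 * math.pi))  # a sphere's area is 4*pi*R^2


def arcminute_length(radius: float) -> float:
    """Length of one minute of arc along a great circle of the given radius."""
    return radius * math.radians(1.0 / 60.0)


if __name__ == "__main__":
    r = authalic_radius(A, B)
    mile_m = arcminute_length(r)
    print(f"authalic radius: {r:,.1f} m")
    print(f"one arcminute:   {mile_m:.4f} m = {mile_m / FOOT_M:.3f} ft")
```

With these inputs the arcminute comes out very close to the 1,853.2480 m (6,080.210 ft) quoted above, which suggests those are the constants behind that figure.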

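In France, the meter was defined in 1791 as one ten-millionth of the distance from the North Pole to the equator along the meridian through Paris. That quarter meridian spans 90 degrees, or 5,400 minutes of arc, so a nautical mile of one minute works out to 10,000,000 / 5,400 ≈ 1,851.85 meters.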

Order finally arrived with the Extraordinary International Hydrographic Conference in Monaco in 1929, where the participating states agreed to define the nautical mile as exactly 1,852 meters. Even then, adoption took time: the United States adopted it in 1954, and the United Kingdom did not officially accept it until 1970.